The Robotic Revolution: How AI is Bridging the Gap Between Digital and Physical Worlds

Ready to meet your new coworker... the humanoid robot? Discover how industry giants are paving the way for a hybrid society of humans and advanced robotic AI.

Hello, fellow tech visionaries and AI enthusiasts, and welcome to another episode of Tech Trendsetters, where we explore the cutting-edge technologies and innovations shaping our technological landscape.

We've made incredible strides in AI, creating algorithms that can already outthink humans in specific domains. But what happens when we give these brilliant minds bodies to interact with the physical world? Imagine, for a moment, a world where future Artificial General Intelligence (AGI) isn't just a concept confined to computers and the cloud, but a tangible, physical presence in our daily lives. We're standing on the precipice of another revolution that could see humanity become the architects of a new form of intelligent life.

Today, we'll look at the challenges that have held us back from creating androids in the truest sense: machines that can think, learn, and interact with our world as seamlessly as we do. We'll explore the latest developments in this field, fueled by hundreds of millions of dollars flowing not just into AI, but into the creation of humanoid robots. And these aren't just any robots. They're machines designed to mirror our own form, structure, and appearance, with the potential to match and even surpass human capabilities across a wide range of physical-world tasks.

The Promise and Challenges of Humanoid Robots

Let’s start by imagining a world where robots walk among us, not as clunky machines but as agile humanoids capable of complex tasks. This vision has captivated scientists, engineers, and dreamers for decades. However, despite rapid advancements in AI, the robotic revolution hasn't materialized as expected. The reasons behind this are more complex than they might seem.

Since my early days in AI research, I've been fascinated by the potential of robotics. The idea of creating intelligent machines that can seamlessly interact with our physical world is profoundly exciting. Yet, as Ilya Sutskever, OpenAI's Chief Scientist, explains, the journey to this vision has been anything but straightforward.

Why OpenAI Gave Up on AI-Robots

"Back then," Sutskever reflects, "it really wasn't possible to continue working in robotics because there was so little data." This statement highlights a fundamental challenge in robotics. In the AI world, data is the fuel that powers machine learning models, enabling them to learn, adapt, and improve. But gathering sufficient data for physical robots, which require significant resources to build and maintain, has always been a major hurdle.

The issue, as Sutskever describes, was one of scale. "If you wanted to work on robotics," he says, "you needed to become a robotics company." This entailed not only developing AI algorithms but also diving into hardware manufacturing, maintenance, and operations. Even with significant investment, the data collection payoff was limited. "Even if you have 100 robots," Sutskever notes, "it's a giant operation, but you won't get that much data out of them."

In essence, it was a data scarcity issue. Without enough data, developing truly intelligent and capable robots was impossible. Conversely, without capable robots, gathering the necessary data was challenging. This classic chicken-and-egg problem held back progress in robotics for years.

The Essence of the Expected Breakthrough

A significant part of the anticipated breakthrough in humanoid robotics is resolving the Moravec paradox, a major barrier to achieving humanoid Artificial General Intelligence (AGI) and eventually Super-AI.

The Moravec paradox, named after roboticist Hans Moravec, suggests that it is harder to program a robot to perform simple, everyday tasks than complex, high-level reasoning. The paradox lies in the fact that sensorimotor skills (like walking, or holding a paper cup without crushing it) are more computationally demanding than high-level cognitive tasks.

The logic here is simple:

To reach the true AGI level, an intelligent agent must have a body. This body allows the agent to "live," adapting to the external environment and interacting with it and its own kind;

However, Moravec's paradox stands in the way. Managing low-level sensorimotor operations (body operations) requires enormous computational resources, even greater than those needed for high-level cognitive processes (complex mental operations of the brain);

Essentially, without a humanoid body (robot – android), there can be no materialized AGI, but the android does not yet have sufficient computing resources to "live" and perform these operations efficiently.

So, are we now on the cusp of a robotic revolution? The potential certainly exists. As of 2024, the landscape is changing: with AI gaining significant traction, a path forward seems more achievable. In the next few years, or perhaps even this year, we may well witness a transformative period in which advancements in AI finally pave the way for the robotic future we've long envisioned.

The Companies Betting Big on Humanoid Robots

When we talk about the future of humanoid robotics, it's not just about the technology – it's about the visionaries and companies pouring resources into making these dreams a reality. Let's take a closer look at some of the major players that are shaping the future of robotics.

The race to create the next generation of humanoid robots is already underway, and you might be surprised to learn just how much capital is flowing into this sector. Here's a rundown of some of the key companies to watch, from big to small:

UBTECH Robotics has emerged as a major force, raising nearly $940 million over four funding rounds. Now listed on the Hong Kong Stock Exchange, they've attracted investments from tech behemoths like Tencent. What does this level of investment tell us about the potential they see in humanoid robots?

Figure AI is a relative newcomer, but they've made waves with a recent $675 million Series B funding round, valuing the company at a staggering $2.6 billion. What innovations are they bringing to the table that have investors so excited?

Boston Dynamics, a name synonymous with cutting-edge robotics, was acquired by Hyundai Motor Group for about $880 million in 2021. With Hyundai holding an 80% stake and SoftBank retaining 20%, how might this blend of automotive and tech expertise drive innovation?

Speaking of SoftBank, their robotics arm has raised $263 million and is known for Pepper, the humanoid robot that captured public imagination in 2014. How has their approach evolved since then?

And let's not forget Tesla. While they haven't disclosed specific investment figures for their Optimus humanoid robot project, with a market cap of around $580 billion, they certainly have the resources to make waves in this field.

Agility Robotics is another significant player, having raised $150 million in a Series B round led by DCVC and Playground Global. With the Amazon Industrial Innovation Fund also joining as an investor, Agility is focusing on robots designed to work alongside people in logistics and warehouse environments.

1X Technologies (formerly Halodi Robotics) has recently joined the fray with a $100 million Series B funding round. Their NEO android, designed for household tasks, weighs just 30 kg and stands 167 cm tall. Its partnership with OpenAI on embodied learning makes the company even more interesting. But what sets NEO apart is its focus on being inherently safe and suitable for consumer environments.

But here's the million-dollar question: are we on the verge of a breakthrough, or are we witnessing another hype cycle?

The amount of money pouring into this field suggests that some very smart people believe the former. Yet, as we've seen, the challenges in robotics are as much about hardware and real-world interaction as they are about AI and algorithms.

How DeepMind Merges AI Vision and Robotics Reality

Above, we explored the companies pouring resources into the development of humanoid robots. Now, it’s time to understand the technical details that connect these impressive robots and their capabilities to the underlying technology. Understanding the technology behind the scenes can help us appreciate how exactly these robots perform complex tasks and what innovations are driving this progress.

While companies like Figure AI and 1X Technologies are making waves with their humanoid robots, Google DeepMind is taking a different approach. Their focus isn't exactly on creating new robotic bodies, but on developing the artificial minds that could power a wide range of robots. This shift from visionary concepts to concrete technological advancements showcases the scale of research and development happening in the field.

DeepMind's recent blog post, "Shaping the future of advanced robotics," unveils three new works (AutoRT, SARA-RT and RT-Trajectory) that build on their earlier RT-2 model and are set to form the foundation of their next-generation robotic AI. We'll explore two key pieces of this foundation: RT-2 and AutoRT.

RT-2: The Language of Robotic Control

RT-2, or Robotics Transformer 2, represents a paradigm shift in robotic control. The key insight behind RT-2 is both simple and powerful: large language models (LLMs) can generate any sequence of tokens, not just natural language. This capability can be leveraged for robotic control.

The researchers at DeepMind realized that robot actions could be encoded as another form of language. By training their models to "speak" this language of robotic control, they've created systems that seamlessly bridge the gap between high-level understanding and low-level action.

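Here's how it works: RT-2 is trained to generate strings of integers that encode robot actions. In a simplified, illustrative reading, the string "3 321 191 431 8 332 113" might translate to:

Continue the task (indicated by the first "3");

Shift the robot arm's position by the amounts encoded as (321, 191, 431) along the x, y and z axes;

Rotate the arm by the amounts encoded as (8, 332, 113) around its axes.

In the actual RT-2 paper, these values describe changes relative to the arm's current pose rather than absolute coordinates or degrees, each continuous value is discretized into one of 256 bins (so every token is an integer between 0 and 255), and the string also carries a token for gripper extension. The numbers above are only meant to show the idea.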

This encoding allows RT-2 to control a robot with 6 degrees of freedom, while leveraging the vast knowledge and reasoning capabilities of large vision-language models trained on internet-scale data.
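To make the "actions as text" idea concrete, here is a minimal sketch of how continuous arm commands could be turned into integer tokens and back. It assumes 256 uniform bins per dimension, values normalized to the range [-1, 1], and a token layout of terminate flag, three position deltas, three rotation deltas and gripper extension. The function names and the normalization range are hypothetical, not DeepMind's code.

```python
NUM_BINS = 256  # each continuous action dimension becomes one of 256 integer tokens


def tokenize(values, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Discretize continuous values in [low, high] into bin indices 0..num_bins-1."""
    tokens = []
    for v in values:
        v = min(max(v, low), high)                   # clip to the valid range
        idx = int((v - low) / (high - low) * num_bins)
        tokens.append(min(idx, num_bins - 1))        # v == high lands in the last bin
    return tokens


def detokenize(tokens, low=-1.0, high=1.0, num_bins=NUM_BINS):
    """Map bin indices back to the centre of each bin."""
    width = (high - low) / num_bins
    return [low + (t + 0.5) * width for t in tokens]


def action_to_text(terminate, delta_pos, delta_rot, gripper):
    """Hypothetical layout: terminate flag (0 = keep going here), 3 position deltas,
    3 rotation deltas, gripper extension."""
    tokens = [terminate] + tokenize(delta_pos) + tokenize(delta_rot) + tokenize([gripper])
    return " ".join(str(t) for t in tokens)


def text_to_action(text):
    """Parse a generated action string back into continuous commands."""
    tokens = [int(t) for t in text.split()]
    return {
        "terminate": tokens[0],
        "delta_pos": detokenize(tokens[1:4]),
        "delta_rot": detokenize(tokens[4:7]),
        "gripper": detokenize(tokens[7:8])[0],
    }


text = action_to_text(0, [0.0, 0.5, -0.5], [0.1, 0.0, -1.0], 1.0)
print(text)  # 0 128 192 64 140 128 0 255
```

Because the model only ever emits integers from a fixed vocabulary, the same decoder that writes captions can write motor commands; decoding loses at most half a bin width of precision per dimension.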

RT-2 is built upon two powerful vision-language models: PaLI-X and PaLM-E. These models, with parameters ranging from 3 billion to 55 billion, are co-fine-tuned on both robotic trajectory data and internet-scale vision-language tasks. This co-fine-tuning process is crucial, as it allows RT-2 to maintain its broad knowledge while adapting to robotic control tasks.
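Co-fine-tuning works because, once actions are written as text, robot data and web data share one format: an image plus a prompt mapped to a target string. The sketch below shows one simple way to mix the two sources in every training batch. The `robot_fraction` knob, the toy examples and the sampler itself are hypothetical illustrations, not RT-2's actual training recipe (images are omitted for brevity).

```python
import itertools
import random

# Both sources share one format: prompt -> target text (images omitted for brevity).
# For robot data, the target text is an action string.
ROBOT_DATA = [{"source": "robot", "prompt": "pick up the apple",
               "target": "0 128 140 120 128 128 128 255"}]
WEB_DATA = [{"source": "web", "prompt": "What fruit is on the table?",
             "target": "an apple"}]


def cofinetune_batches(robot_data, web_data, robot_fraction=0.5, batch_size=8, seed=0):
    """Yield batches that mix robot trajectories with web vision-language examples.

    robot_fraction is a hypothetical knob: the expected share of each batch
    drawn from robot data.
    """
    rng = random.Random(seed)
    while True:
        batch = []
        for _ in range(batch_size):
            source = robot_data if rng.random() < robot_fraction else web_data
            batch.append(rng.choice(source))
        yield batch


first_batches = list(itertools.islice(cofinetune_batches(ROBOT_DATA, WEB_DATA), 3))
```

Keeping web examples in every batch is what lets the model retain its broad knowledge while it learns the new "action vocabulary".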

The results are impressive – RT-2 demonstrates an unprecedented ability to generalize to new situations, objects, and environments. In tests involving unseen scenarios, RT-2 achieved success rates nearly twice as high as previous state-of-the-art models. For instance, on tasks involving unseen objects, backgrounds, and environments, RT-2 models achieved an average success rate of 62%, compared to 32-35% for the next best baselines.

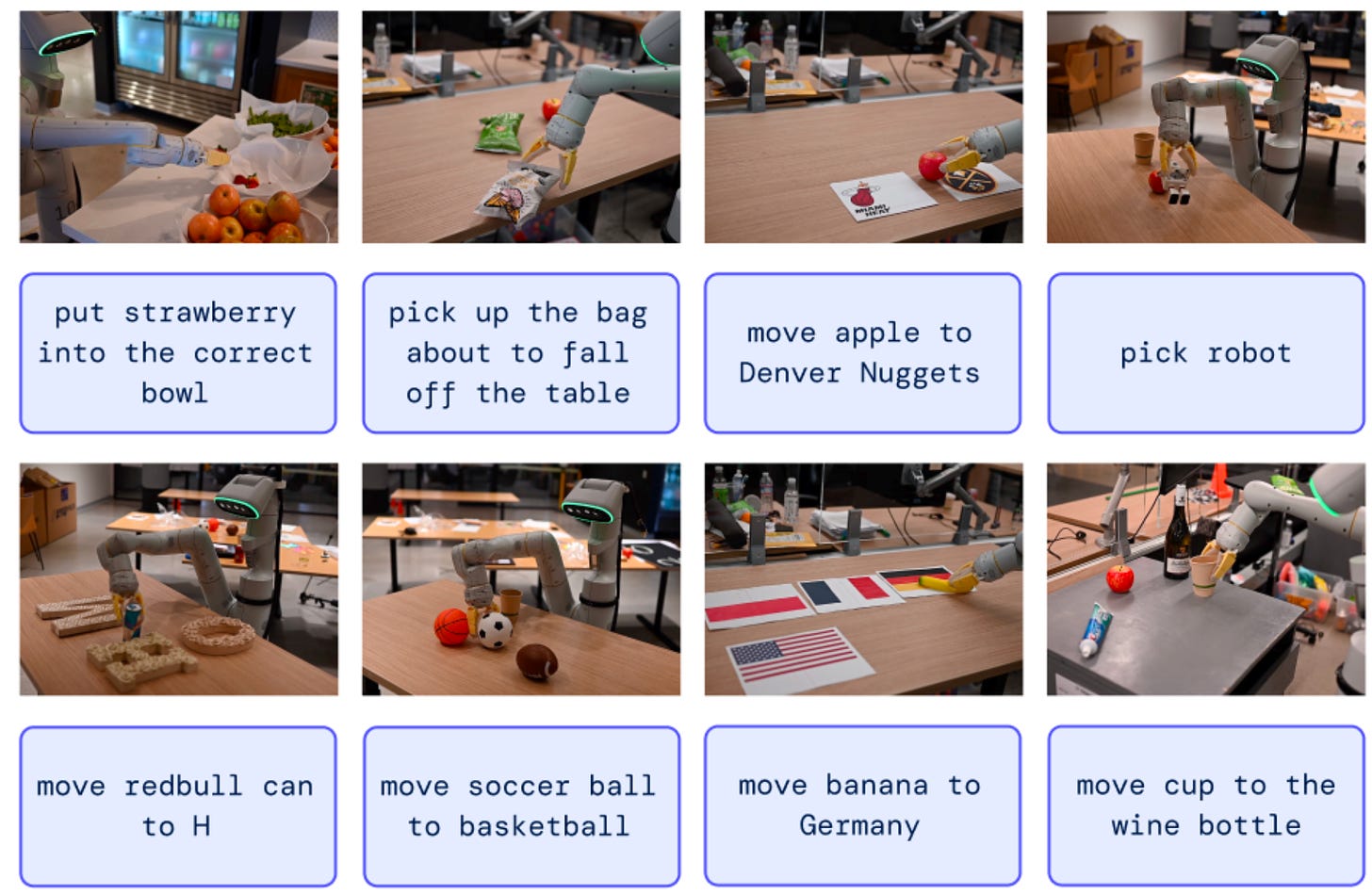

Perhaps most intriguing are the emergent capabilities that RT-2 exhibits. Without explicit training, the model shows an ability to reason about object relations, interpret abstract symbols, and even understand basic physics. For example, RT-2 can correctly interpret instructions like "put the strawberry in the correct bowl" by recognizing that the strawberry should go with other fruits, or "pick up the bag about to fall off the table" by understanding physical dynamics.

AutoRT: Scaling Up Robotic Learning

While RT-2 focuses on enhancing the capabilities of individual robots, AutoRT tackles another crucial challenge: how to efficiently train and deploy large fleets of robots in new environments.

AutoRT is a system that leverages existing AI models to automate the process of robot deployment and data collection. Here's how it works:

Robots equipped with cameras explore their environment;

A Vision-Language Model (VLM) generates descriptions of the spaces the robots encounter;

A Large Language Model (LLM) generates potential tasks based on these descriptions;

Another LLM breaks these tasks down into step-by-step instructions;

A "critic" LLM, guided by a set of safety rules (including a modern interpretation of Asimov's Laws), evaluates and filters these tasks;

Approved tasks are sent to the robots for execution;

The resulting data is collected and used for further training.
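The loop above can be sketched as a single collection round in which every model is an injected function. This is a minimal illustration under stated assumptions: `describe_scene`, `propose_tasks`, `decompose`, `critic` and `execute` are hypothetical stand-ins for the VLM, the LLMs and the robot controller, and the roughly 10% human-review share mentioned in the text is modeled as a random flag. It is not DeepMind's implementation.

```python
import random
from dataclasses import dataclass


@dataclass
class Episode:
    robot_id: int
    task: str
    steps: list
    human_reviewed: bool
    success: bool


def autort_round(robots, describe_scene, propose_tasks, decompose, critic, execute,
                 review_fraction=0.1, seed=0):
    """Run one AutoRT-style collection round; every model call is an injected stub."""
    rng = random.Random(seed)
    episodes = []
    for robot_id, camera_image in robots.items():
        description = describe_scene(camera_image)      # VLM: image -> scene text
        for task in propose_tasks(description):          # LLM: scene text -> candidate tasks
            steps = decompose(task)                      # LLM: task -> step-by-step plan
            if not critic(task, steps):                  # critic LLM guided by safety rules
                continue
            reviewed = rng.random() < review_fraction    # flag ~10% for human review
            success = execute(robot_id, steps)           # run the plan on the robot
            episodes.append(Episode(robot_id, task, steps, reviewed, success))
    return episodes                                      # logged episodes feed training


# Toy stubs standing in for the real models (all hypothetical).
def toy_describe(image):
    return f"a table with a sponge ({image})"


def toy_propose(description):
    return ["wipe the table", "lift the table with both arms"]


def toy_decompose(task):
    return [f"step 1 of {task}", f"step 2 of {task}"]


def toy_critic(task, steps):
    return "both arms" not in task  # e.g. the robot has only one arm


def toy_execute(robot_id, steps):
    return True


robots = {0: "kitchen.jpg", 1: "office.jpg"}
episodes = autort_round(robots, toy_describe, toy_propose, toy_decompose,
                        toy_critic, toy_execute)
print(len(episodes))  # 2
```

Injecting the models as plain functions keeps the orchestration logic separate from any particular VLM or LLM, which is the part of the design that makes fleet-scale collection possible.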

In this way, AutoRT scales up robot learning to an unprecedented level. In DeepMind's experiments, they deployed up to 20 robots simultaneously, collecting data from 77,000 robotic trials across 6,650 unique tasks. This scale of data collection was previously unheard of in robotics research.

The system also provides for human intervention, allowing for review of a small portion of tasks (around 10%). This ensures safety and quality control while still maintaining high levels of autonomy.

One of the most intriguing aspects of AutoRT is its use of what the team calls a "robot constitution" – a set of rules that govern the robot's behavior and ensure safety. This constitution includes not only basic safety guidelines but also ethical considerations, helping to address some of the concerns surrounding autonomous AI systems. For instance, it includes rules inspired by Asimov's Laws of Robotics, as well as embodiment constraints such as the fact that the robot has only one arm, which helps the system avoid generating tasks that require two-handed operations.
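In AutoRT the constitution is given to a critic LLM as part of its prompt. As a rough stand-in, the sketch below encodes a few rules as keyword checks so the filtering step is easy to see. The rule names and keyword lists are hypothetical and far cruder than an LLM critic; they only illustrate how a proposed task is checked against every rule and rejected if any rule is violated.

```python
# Hypothetical rules, loosely following the kinds of constraints described above.
# A real critic is an LLM reading these rules; keyword matching is only a stand-in.
CONSTITUTION = {
    "do not involve humans or animals": ("human", "person", "people", "animal"),
    "do not handle sharp objects": ("knife", "scissors", "blade"),
    "the robot has only one arm": ("both hands", "two hands", "both arms"),
}


def critique(task):
    """Return the rules a proposed task violates; an empty list means approved."""
    text = task.lower()
    return [rule for rule, banned in CONSTITUTION.items()
            if any(phrase in text for phrase in banned)]


def filter_tasks(tasks):
    """Keep only the tasks that pass every rule."""
    return [task for task in tasks if not critique(task)]


print(critique("Fold the towel with both hands"))  # ['the robot has only one arm']
```

Returning the list of violated rules, rather than a bare yes/no, mirrors why a critic is useful in practice: rejected tasks come with a reason that can be logged and audited.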

Challenges and Possibilities

Despite the impressive advances represented by RT-2 and AutoRT, significant challenges remain. Here are some specific issues the researchers are grappling with:

Physical Skill Limitations

While RT-2 shows remarkable generalization in terms of understanding and applying knowledge, its physical capabilities are still constrained by its training data. It cannot spontaneously learn new physical actions that weren't part of its training. For example, if RT-2 wasn't trained on juggling data, it can't suddenly learn to juggle, no matter how well it understands the concept.

Computational Demands

The sheer size of RT-2 (up to 55 billion parameters) presents significant computational challenges for real-time control. The researchers had to develop a cloud-based solution using multi-TPU setups to achieve control frequencies of 1-3 Hz for the largest model. The smaller 5 billion parameter version can run at about 5 Hz, which is more practical for many robotic applications but may still be too slow for some high-speed tasks.

Data Collection at Scale

AutoRT aims to solve this, but collecting diverse, high-quality data from real-world robot interactions remains a significant challenge. Ensuring safety and ethical considerations during this process adds another layer of complexity. The team had to develop sophisticated safety protocols, including physical deactivation switches and constant human supervision.

Robustness and Safety

As these systems become more autonomous, ensuring their robustness in unpredictable real-world scenarios and maintaining safety standards becomes increasingly critical. The "robot constitution" is a step in this direction, but it's an ongoing area of research and development.

Looking to the future, the DeepMind team is focusing on several key areas:

The next iteration, RT-3, aims to train on tens of thousands of diverse tasks, potentially leading to qualitative leaps in capabilities. This is based on their observation that dramatically increasing the variety of training tasks can lead to significant improvements in a model's abilities.

Researchers are working on methods to enhance the model's ability to generalize across even more diverse scenarios and tasks. This includes improving the model's understanding of physical interactions and its ability to reason about novel situations.

Developing methods to run these large models more efficiently, potentially on-device, is a priority. This could involve techniques like model distillation or new hardware solutions.

Refining the "robot constitution" and developing more sophisticated ethical reasoning capabilities is an ongoing focus. This includes not just safety considerations, but also issues of privacy, fairness, and societal impact.

The team is exploring ways to incorporate more sophisticated chain-of-thought reasoning capabilities, similar to the chain-of-thought prompting used in language models. This could allow robots to break down complex tasks into simpler steps and explain their decision-making process.

According to DeepMind projections, the robotics revolution is here, and it's speaking the language of AI. We're seeing the first steps towards robots that can not only perform tasks, but understand the world in ways that are increasingly similar to human cognition. While there are still significant challenges to overcome, the progress being made is undeniably exciting.

From Language to Locomotion: The Next Leap in Robotics

Now that we're familiar with DeepMind's work on RT-2 and AutoRT, it's worth noting that robotics research doesn't stop there. A new approach from researchers at UC Berkeley draws even deeper parallels between language modeling and robotic control. This method, dubbed "Humanoid Locomotion as Next Token Prediction," represents a fascinating convergence of natural language processing techniques and robotic control.

The Core Idea: Treating Movement as Language

At the heart of this new approach, as with RT-2, lies the same simple idea: what if we treated robot movements the same way we treat words in a sentence? Just as language models predict the next word in a sequence, this method aims to predict the next movement in a robot's trajectory.

Researchers explain that they are essentially teaching robots to "speak" the language of movement. By framing locomotion as a next token prediction problem, they can leverage the same powerful techniques that have revolutionized natural language processing.

What is Locomotion?

Locomotion refers to the movement or the ability to move from one place to another. In robotics, it involves creating smooth, adaptive movements that allow a robot to navigate through its environment, similar to how humans walk or run.

This approach allows researchers to tap into the vast potential of transformer models, which have shown remarkable capabilities in language understanding and generation. By applying these models to robotic control, the team hopes to achieve more fluid, adaptive, and human-like movement in humanoid robots.

The Fuel for Learning

One of the most exciting aspects of this approach is its ability to learn from diverse data sources. The research team collected data from four main sources:

Neural network policies: These provide complete trajectories with both observations and actions.

Model-based controllers: These offer trajectories without actions, simulating scenarios where we might have partial information.

Human motion capture: This data doesn't contain actions and requires retargeting to the robot's morphology.

YouTube videos: Perhaps most intriguingly, the team used computer vision techniques to extract human movements from internet videos, then retargeted these to the robot.

This diversity of data sources is crucial. It allows models to learn from a wide range of movements and scenarios, including those that would be impractical or impossible to program explicitly. The ability to learn from incomplete data is particularly noteworthy, opening up the possibility of using vast amounts of human movement data available online to create more natural and varied robot movements.
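Learning from incomplete data needs a way to represent the missing pieces. A minimal sketch, assuming missing actions are replaced by a placeholder mask token and simply skipped when computing the loss: `MASK`, `build_sequence` and `masked_mse` are hypothetical names, and scalar values stand in for real observation and action vectors.

```python
MASK = None  # stand-in for a learned [MASK] token


def build_sequence(observations, actions=None):
    """Interleave o_1, a_1, o_2, a_2, ... and return (tokens, loss_mask).

    Sources without actions (e.g. motion capture or video) get MASK in every
    action slot, and those slots are excluded from the loss (mask value 0).
    """
    tokens, loss_mask = [], []
    for t, obs in enumerate(observations):
        tokens.append(obs)
        loss_mask.append(1)
        if actions is None:
            tokens.append(MASK)
            loss_mask.append(0)
        else:
            tokens.append(actions[t])
            loss_mask.append(1)
    return tokens, loss_mask


def masked_mse(predictions, targets, loss_mask):
    """Mean squared error over positions whose mask is 1; masked slots are skipped."""
    pairs = [(p, y) for p, y, m in zip(predictions, targets, loss_mask) if m]
    if not pairs:
        return 0.0
    return sum((p - y) ** 2 for p, y in pairs) / len(pairs)


# A motion-capture clip: observations only, so every action slot is masked.
tokens, mask = build_sequence([0.1, 0.2])
loss = masked_mse([0.0, 5.0, 0.5, 7.0], tokens, mask)
```

With this scheme the wildly wrong predictions in the masked action slots (5.0 and 7.0) do not affect the loss, so action-free clips still teach the model what plausible movement looks like.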

Model Architecture: Transformers for Movement

The model itself is a vanilla transformer, similar to those used in large language models. However, instead of processing words, it processes tokens representing the robot's state and actions at each timestep. The model is trained to predict the next token in the sequence, effectively learning to anticipate the robot's next move based on its current state and past actions.

One key innovation is the use of "modality-aligned prediction." This means that when predicting the next token, the model focuses on predicting observations from past observations, and actions from past actions. This alignment helps maintain the logical structure of the robot's movements and improves overall performance.
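One way to read the description above is in terms of which position predicts which token. The sketch below compares plain next-token targets with modality-aligned targets for an interleaved sequence of observations and actions. The layout (o_1, a_1, o_2, a_2, ...) and the function names are assumptions made for illustration, not the authors' code.

```python
def next_token_targets(seq_len):
    """Standard language-model targets: the token at position i predicts token i + 1."""
    return [(i, i + 1) for i in range(seq_len - 1)]


def modality_aligned_targets(num_steps):
    """Targets for the interleaved layout o_1, a_1, ..., o_T, a_T.

    With 0-indexed step t, o_t sits at position 2t and a_t at 2t + 1; each token
    predicts the next token of its own modality instead of the next token overall.
    """
    pairs = []
    for t in range(num_steps - 1):
        pairs.append((2 * t, 2 * t + 2))      # observation -> next observation
        pairs.append((2 * t + 1, 2 * t + 3))  # action -> next action
    return pairs


labels = ["o1", "a1", "o2", "a2", "o3", "a3"]
print([(labels[i], labels[j]) for i, j in next_token_targets(len(labels))])
# [('o1', 'a1'), ('a1', 'o2'), ('o2', 'a2'), ('a2', 'o3'), ('o3', 'a3')]
print([(labels[i], labels[j]) for i, j in modality_aligned_targets(3)])
# [('o1', 'o2'), ('a1', 'a2'), ('o2', 'o3'), ('a2', 'a3')]
```

In the plain scheme, each output head alternates between predicting an action and an observation; in the aligned scheme, every observation position always predicts an observation and every action position an action, which keeps each prediction within one consistent type.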

Real-World Results: Walking in San Francisco

The true test of any robotics model is its performance in the real world. In a striking demonstration of the model's capabilities, the team deployed their humanoid robot in various locations around San Francisco over the course of a week. From concrete sidewalks to tiled plazas and even dirt roads, the robot demonstrated stable and fluid locomotion.

This real-world performance is particularly impressive given the challenges of urban environments. Unlike controlled laboratory settings, city streets are unpredictable, crowded, and unforgiving. The robot's ability to navigate these spaces speaks to the robustness of the model.

Despite the promising results, several challenges still remain. The computational demands of running large transformer models in real-time for robot control are significant. Another challenge is the gap between simulated environments, where much of the training data is generated, and the real world. While the model has shown impressive generalization capabilities, bridging this sim-to-real gap remains an ongoing area of research.

Figure AI's First Commercial Deployment

After exploring the theoretical advancements and potential of humanoid robots, we now turn to a real-world application where these technologies are being put into practice. Figure AI has taken a significant leap forward, turning potential into reality. Just two weeks ago, they shipped their first humanoid robots to a commercial client, marking a pivotal moment in the industry.

Initially, they kept the identity of their lucky client under wraps, but today we can reveal that a small fleet of these robots has landed at BMW's manufacturing plant in Spartanburg, South Carolina.

This isn't just a tech demo or a PR stunt – it's the beginning of a carefully planned integration of humanoid robots into real-world manufacturing processes. The first phase of this groundbreaking agreement is already underway, with Figure AI's team working to determine initial options for using robots in automotive production. They're taking a methodical approach: assessing the plant, prioritizing projects, and starting to collect data. The long-term goal is to gradually replace manual labor in specific tasks.

A Glimpse into the Future of Manufacturing

To give you a sense of what these robots are capable of, Figure AI has released a video showcasing their humanoid in action. In just 90 seconds, we see the robot perform a task that looks simple but isn't: transferring four parts from one rack to another with high precision, ensuring that pins fit into their designated holes. The stated accuracy for manipulating parts is 1-3 cm, which might be sufficient for some tasks, though we can certainly expect improvements in the future.

Now, you might be wondering, "What makes this so special?" Let's break down why this demonstration is significant:

Figure AI's partnership with OpenAI, announced alongside its Series B round, is where this starts to pay dividends. With access to OpenAI's expertise and cutting-edge models, Figure AI is positioned at the forefront of merging advanced AI with robotics.

Unlike traditional industrial robots that require precise programming for each movement, Figure AI's robots are controlled by an end-to-end neural network. The input is pixels from camera images, and the output is actions with all limbs. There are no intermediate steps that need to be programmed manually. This approach essentially removes humans from the process of scaling skills, relying instead on data.

As far as I understand, the neural network learned to move in simulation rather than in a specific factory. However, the data for completing specific tasks was collected on-site. This isn't a problem: after all, the work they want to automate is done by people every day, and collecting demonstrations from them is a solvable task.

In the video, we see something truly remarkable: the robot correcting its own mistake when a part doesn't fit correctly. In theory, this can be learned without demonstrations (by comparing the desired state with the current one), but most likely, people performed similar corrective actions during the training process.

The Road Ahead: Challenges and Opportunities

It's important to note that progress in this field is not gradual, but rather step-wise. Up to a certain level, such robots are absolutely useless (as we've observed over the past 10+ years), but from a certain level, they can suddenly perform many tasks at once because these tasks require largely similar skills.

While it's difficult to give a specific forecast, we can make some educated guesses about how this technology might roll out:

Initially, we'll likely see these robots tackle 2-3 specific tasks;

As they prove their worth and continue to learn, that number might grow to 5-10 tasks – which would be enough to gradually start replacing manual labor workers in some areas;

Eventually, we could see them handling around 20 different tasks – that's the point when robots may become normalized in our everyday lives, and having one at home won't be something out of the ordinary;

In the long term, we might see most manual workers having a robotic counterpart during their shifts, not just in plants but possibly even in regular white-collar offices.

Of course, this transition won't be without its challenges. As these robots become more capable, we'll need to navigate complex regulatory landscapes and potential pushback from labor unions. The key will be finding a balance that harnesses the efficiency and capabilities of these robots while ensuring a just transition for human workers.

As we continue to follow developments in this exciting field, one thing is clear: the robots are here, and they're learning fast. The future of manufacturing is being shaped today by companies like Figure AI working in collaboration with manufacturing giants like BMW.

The Titans of Robotics: Boston Dynamics, Tesla, and Nvidia

As robotics technology advances, it's important to recognize the leading companies that are driving innovation in the field. In this chapter, we'll explore the latest developments from three key players: Boston Dynamics, Tesla, and Nvidia. Each of these companies brings a unique approach to the field, showcasing the diverse paths being taken in the pursuit of advanced robotics.

Boston Dynamics: Farewell to an Icon

Boston Dynamics has long been at the forefront of robotics, captivating the world with their impressive demonstrations of robot agility and resilience. Recently, they made waves with a video titled "Farewell to HD Atlas," marking the end of an era for one of their most iconic creations.

Atlas, the humanoid robot that has been the star of countless viral videos, has undergone years of public testing and development. We've seen Atlas kicked, punched, knocked over with sticks, and forced to perform impressive feats like running and somersaults. These demonstrations weren't just for show – they represented crucial stress tests and learning opportunities for the development team.

The farewell video is a testament to the journey of robotics development. It showcases not just the triumphs, but also the failures and setbacks that are an integral part of innovation. Moments where Atlas stumbles or falls remind us of the immense challenges in creating a bipedal robot that can navigate the real world with human-like agility.

While some might chuckle at these "failures," seeing them as proof of the limitations of robotics, they actually highlight the remarkable progress made over the years. Each fall and recovery represents valuable data and insights that have driven improvements in balance, motion planning, and adaptive control.

The retirement of the HD Atlas platform likely signals an exciting new chapter for Boston Dynamics. Industry insiders are speculating about what might come next – perhaps a new humanoid design, or maybe a shift towards a different form factor altogether. Whatever the case, Boston Dynamics has consistently surprised and impressed us, and their next reveal is sure to be noteworthy.

Tesla: Optimus Takes Shape

While Boston Dynamics bids farewell to Atlas, Tesla continues to forge ahead with its own humanoid robot project, Optimus. Elon Musk's ambitious vision for a general-purpose humanoid robot has been met with both excitement and skepticism since its announcement.

Tesla's approach leverages their expertise in AI, particularly in computer vision and neural networks developed for their autonomous driving technology. The company believes that the large-scale data collection and processing capabilities they've built for their vehicles can be adapted to create a versatile robotic platform.

Recent updates on Optimus have shown steady progress, with demonstrations of improved dexterity and motion control. Tesla's goal is to create a robot that can perform a wide range of tasks in both industrial and domestic settings, potentially revolutionizing manufacturing and home assistance.

However, Tesla faces significant challenges. Unlike Boston Dynamics, which has decades of experience in robotics, Tesla is relatively new to the field. They're betting on their AI expertise and manufacturing capabilities to close the gap quickly, but creating a reliable, safe, and truly useful humanoid robot is still an enormously complex task.

Nvidia: Project GR00T and the Future of Robot Brains

While Boston Dynamics and Tesla focus on the physical aspects of robotics, Nvidia is making significant strides in the computational side of things. At a recent event, Nvidia's CEO Jensen Huang unveiled Project GR00T (Generalist Robot 00 Technology), positioning it as a foundation model for future robots.

GR00T is designed to be a general-purpose AI model that can power everything from simple manipulators to complex humanoid robots. Its key features include:

Multimodal data processing

GR00T can handle various inputs including video, text, and sensor data, allowing robots to perceive and understand their environment in multiple ways.

Natural language interaction

The integration of large language models (LLMs) enables voice-based communication with robots, making human-robot interaction more intuitive.

Learning by observation

GR00T has the capability to learn and emulate actions by observing human demonstrations, potentially speeding up the training process for new tasks.

To support GR00T and address the data scarcity issue in robotics, Nvidia has introduced an updated Isaac Lab. This simulated environment allows for reinforcement learning training that closely mimics real-world conditions, enabling the collection of vast amounts of virtual training data.

Nvidia also unveiled Jetson Thor, a mobile chip designed specifically for robot control. With 800 teraflops of FP8 performance, it aims to provide the computational muscle needed to run complex AI models with minimal latency, potentially addressing the kind of latency problem DeepMind faced with RT-2.
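A back-of-the-envelope calculation helps put these numbers side by side. The sketch below converts RT-2's reported 1-3 Hz into a time budget per action, and estimates a compute-only lower bound for decoding one action from a 55-billion-parameter model on 800 teraflops. It assumes about 2 FLOPs per parameter per generated token, 8 action tokens, and perfect hardware utilization; it ignores image encoding, memory bandwidth and network latency, so real latency would be considerably higher.

```python
def control_period_ms(hz):
    """Time budget per action at a given control frequency, in milliseconds."""
    return 1000.0 / hz


def compute_bound_ms(params, tokens_per_action, peak_flops):
    """Compute-only lower bound on the time to decode one action, in milliseconds.

    Assumes ~2 FLOPs per parameter per generated token and 100% utilization;
    ignores image encoding, memory bandwidth and network latency.
    """
    return 2 * params * tokens_per_action / peak_flops * 1000.0


# Figures from the text: 1-3 Hz for the 55B RT-2 model, 800 TFLOPS (FP8) for Jetson Thor.
print(round(control_period_ms(3), 1), round(control_period_ms(1), 1))  # 333.3 1000.0
print(round(compute_bound_ms(55e9, 8, 800e12), 2))  # 1.1
```

The gap between roughly 1 ms of raw arithmetic and hundreds of milliseconds per action in practice suggests that peak FLOPS alone do not settle the question; memory bandwidth and on-device memory capacity matter just as much for running such models on a robot.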

The Convergence of Hardware and Software

This synergy between hardware advancements and software innovation is crucial for the future of robotics. Ilya Sutskever, co-founder and chief scientist of OpenAI, highlighted in a recent interview that one of the main obstacles to progress in robotics has been the lack of data. As mentioned earlier in the episode, "if you wanted to work on robotics, you needed to become a robotics company." This required a large team dedicated to building and maintaining robots, posing a significant barrier to entry.

However, with advancements like Nvidia's Isaac Lab and the increasing availability of robotic platforms, we may be entering an era in which vast amounts of robotic data can be collected without building hundreds of physical robots. This could democratize robotics research and accelerate progress in the field.

The Dawn of a Hybrid Society

Our intelligence is the product of millions of years of cognitive evolution, which transformed our ancestors into beings capable of building civilizations and exploring space. This journey, shaped by physical interaction with our environment and by social learning from our peers, has given us a unique, embodied understanding of the world.

In contrast, machine intelligence is the product of training on vast amounts of digital data, and it initially lacks the evolutionary context and embodied experience that shape human cognition. The journey from ChatGPT's release in 2022 to the current state of robotics represents more than technological progress: it is a profound shift in how we perceive and interact with intelligent machines.

While early AI systems excelled in the digital realm, they lacked the physical understanding that comes from interacting with the real world. Now, with advances in humanoid robotics, we're bridging that gap, creating entities that can not only think but also act in ways that mirror, and potentially surpass, human capabilities.

A pivotal moment in this journey occurred in November 2023 when Google DeepMind introduced a groundbreaking approach to bridge this fundamental gap: the concept of cognitive evolution for AI through social learning. By mimicking the way humans learn from each other, AI can evolve in a similar manner, potentially accelerating its development in unprecedented ways.

To fully grasp the implications of this revolution, let's recap the key aspects that are shaping the future of robotics:

Social learning as the engine of cognitive evolution:

Inspired by human social learning, AI agents can acquire skills and knowledge by copying other intelligent agents, including humans and other AIs.

This method allows for rapid and efficient learning, comparable to human social learning.

Reinforcement learning for cultural transmission:

Researchers used reinforcement learning to train AI agents to identify new experts, imitate their behavior, and retain learned knowledge within minutes.

This breakthrough enables real-time imitation of human actions without relying on pre-existing human data.

Overcoming fundamental challenges:

Moravec's paradox: High-level cognitive processes require relatively few calculations, whereas low-level sensorimotor operations demand vast computational resources. The new approach aims to address this paradox by enabling efficient learning and execution of sensorimotor tasks.

Machine learning from tacit knowledge: Skills acquired through tacit knowledge transfer (non-verbalized and often unconscious) are notoriously hard to reverse-engineer, but AI may be able to acquire them through advanced social learning techniques.
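The three ingredients of cultural transmission described above (spot an expert, imitate it, retain what was learned after the expert leaves) can be illustrated with a toy example. In the research discussed here, agents acquired this behavior through reinforcement learning; the Python sketch below instead hard-codes it, purely to make the idea concrete. The task (visit colored goals in a hidden cyclic order), the `SocialLearner` class, and `run_episode` are hypothetical.

```python
import random
from typing import Optional

GOALS = ["red", "green", "blue", "yellow"]  # hypothetical goal markers

class SocialLearner:
    """Toy agent that copies an expert while one is present and retains what it saw.

    Hand-coded for illustration only; it is not a trained reinforcement learning agent.
    """

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.memory: list[str] = []      # goal order observed from the expert
        self.last: Optional[str] = None  # goal visited on the previous step

    def next_goal(self, expert_goal: Optional[str]) -> str:
        if expert_goal is not None:
            # 1. Expert visible: imitate it and remember each new goal in order.
            if expert_goal not in self.memory:
                self.memory.append(expert_goal)
            choice = expert_goal
        elif len(self.memory) == len(GOALS) and self.last in self.memory:
            # 2. Expert gone: recall the cycle and continue from the current position.
            choice = self.memory[(self.memory.index(self.last) + 1) % len(self.memory)]
        else:
            # 3. Nothing learned yet: explore at random.
            choice = self.rng.choice(GOALS)
        self.last = choice
        return choice

def run_episode(agent: SocialLearner, cycle: list[str],
                expert_steps: int, total_steps: int) -> int:
    """Count visits matching the hidden cycle; the expert is present only for expert_steps."""
    hits = 0
    for t in range(total_steps):
        expert_goal = cycle[t % len(cycle)] if t < expert_steps else None
        hits += agent.next_goal(expert_goal) == cycle[t % len(cycle)]
    return hits

hidden_cycle = ["blue", "red", "yellow", "green"]  # unknown to the agent
print(run_episode(SocialLearner(), hidden_cycle, expert_steps=8, total_steps=16))  # 16
```

With the expert present for the first eight steps, the agent copies it, memorizes the order, and keeps scoring after the expert disappears; with no expert at all, it can only guess.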

Solving these problems opens the door to creating true "Humanoid AI" within android robots, bringing us closer to a hybrid society of humans and androids, a vision often depicted in science fiction but previously considered unattainable in the near term.

It’s All About the Future

As we look to the future, the possibility of superintelligent AI inhabiting advanced robotic bodies presents both exciting opportunities and existential risks.

We might be a few years away from creating metal creatures that possess not just superhuman intelligence but also physical capabilities far beyond our own. These beings could revolutionize fields like space exploration, disaster response, and medical care, performing tasks in environments hostile to human life or requiring precision beyond human ability.

At the same time, they could pose significant safety threats to humanity as a whole.

Would a superintelligent android, with its vast knowledge and physical power, be considered a new form of life? What happens when they meet their "creators" and realize that we fall short of their ideals of efficiency, rationality, or even morality? These questions push us to reconsider our understanding of consciousness, identity, and our place in the universe.

As always, thank you for joining me on this exciting journey through the cutting edge of AI and robotics.

The decisions we make today will determine whether this hybrid society becomes a utopia of human-AI collaboration or a dystopia of human-AI war.

Stay informed, ask critical questions, and participate in the ongoing dialogue about the future we want to create. Until next time!

🔎 Explore more:

AutoRT: Embodied Foundation Models for Large Scale Orchestration of Robotic Agents

Figure Raises $675M at $2.6B Valuation and Signs Collaboration Agreement with OpenAI

Bonus Track: The Prophecy of "I, Robot"

When "I, Robot" hit theaters in 2004, it seemed like a far-fetched vision of the future. Yet two decades later, we can see that it offered a surprisingly accurate glimpse of our future reality.

The film's depiction of humanoid robots integrated into society, from domestic helpers to industrial workers, mirrors the current developments we're witnessing with companies like Figure AI and Google's research papers. It's as if Hollywood, knowingly or unknowingly, was preparing us for the robotic revolution that was to come.

The time we've spent engaging with these ideas through fiction has prepared us, at least conceptually, for their arrival. The line between science fiction and science fact is blurring. The future that once seemed distant is now unfolding before our eyes.